Humans and AI are shaking hands

If every time someone mentioned AI in our surroundings a bell went off, we’d be treated to a constant symphony of ding, ding, ding!

But are those enthusiastic dings just noise? Far from it.

A Harvard Kennedy School professor confirms that equipping employees with Generative AI tools can amplify productivity by about 25%. So, it’s no surprise that McKinsey’s 2024 survey revealed that 72% of businesses use AI in at least one function, and 50% in two or more.

Venture capitalists are equally captivated. In February 2024 alone, AI startups raised $4.7 billion, more than double the $2.1 billion raised in February 2023.

Though AI is a fresh field, its growth is phenomenal: generative videos and images are evolving into immersive virtual game worlds, and new language models now demonstrate step-by-step problem-solving. Even the 2024 Nobel Prize in Chemistry was awarded in part to scientists whose AI system solved a 50-year-old challenge in science.

But amid an escalating global race in AI innovation, forecasting the path of AI evolution might appear challenging. Yet how could we resist the plunge?

Meet the expert

To peek into what’s coming, we sat down with Alexey Karankevich, AI Innovation Lead at Oxagile, who shared the most thrilling upcoming AI and machine learning trends of 2025.

Expert’s predictions for AI in 2025

#1 Cost optimization

From the energy consumption of running models to the computational resources required for training, the focus on cost optimization is becoming one of the major AI trends in 2025.

The reason? AI models are incredibly resource-intensive. To put it simply, by some estimates a single prompt on advanced models like ChatGPT can use as much energy as a light bulb left on for about an hour. Too many deep questions for ChatGPT, and we might end up needing a few more candles around.

It’s encouraging to see that this challenge is receiving attention. A notable example supporting this trend is the recent $500 billion private sector investment in AI infrastructure announced by President Donald Trump.

But what exactly does cost optimization in AI entail?

At its core, cost optimization means refining every stage of machine learning operations, from data acquisition and storage to model deployment, maintenance, and infrastructure improvement.

Firstly, it is closely tied to MLOps, and in 2025, engineers will most likely emphasize:

- Data efficiency

Engineers will need to answer key questions such as “How much data is needed?”, “Where is it sourced from?”, and “How can it be stored cost-effectively?”. The goal is to ensure that AI models do not process unnecessary data, which reduces storage and computing costs.

- Automating AI pipelines

It will be crucial to build end-to-end machine learning pipelines that are scalable, reproducible, and optimized for efficiency, and that handle data ingestion, model training, deployment, and monitoring with minimal human intervention.

- Efficient cloud resource management

Serverless computing and multi-cloud strategies are gaining traction, allowing companies to deploy models without maintaining dedicated infrastructure. The setup and optimization of these strategies once again fall under the purview of MLOps engineers.

Secondly, since cost and resource optimization is also a Data Science concern, 2025 will see increased emphasis on model optimization, particularly through distillation. This technique transfers knowledge from a larger, more complex model to a smaller, more efficient one, which retains most of the larger model’s predictive power while reducing computational costs.
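To make distillation a little more tangible, here is a minimal sketch of the loss a smaller "student" model is commonly trained to minimize: the divergence between its softened predictions and those of the larger "teacher". The function names, the example logits, and the temperature value are illustrative assumptions, not taken from any specific library.

```python
import math

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature softens the distribution."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """KL divergence from the softened teacher distribution to the student's.

    Multiplying by temperature squared is a common convention that keeps
    gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    kl = sum(p * math.log(p / q) for p, q in zip(p_teacher, p_student))
    return kl * temperature ** 2

# Hypothetical logits for a three-class problem.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]   # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]     # disagrees with the teacher

loss_close = distillation_loss(close_student, teacher)
loss_far = distillation_loss(far_student, teacher)
```

A student that mimics the teacher gets a loss near zero, while one that disagrees is penalized more heavily. In practice this term is usually combined with the ordinary loss on the true labels.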

Why is this important? Larger AI models require extensive computing power, often relying on cloud-based processing. However, optimized versions of these models can be run locally on edge devices (such as smartphones or IoT devices), reducing latency and enhancing efficiency. A great example of this is Apple Intelligence, which runs AI models directly on mobile devices rather than depending entirely on cloud servers.

As we forge ahead, the evolution of many other existing optimization methods is a given. Beyond well-known techniques like quantization (which stores model weights at lower numerical precision to cut memory use and speed up inference) and pruning (which removes non-essential parts of the model), there could be noteworthy progress in optimizing the transformer-based models widely used to understand and generate text.
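As a rough illustration of the two techniques named above, the sketch below applies symmetric 8-bit quantization and magnitude-based pruning to a small list of weights. It assumes the simplest textbook variants (one shared scale, pruning by absolute value); the function names and sample weights are hypothetical, and production toolchains are considerably more elaborate.

```python
def quantize_int8(weights):
    """Symmetric quantization: map floats to integers in [-127, 127] using one shared scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale

def dequantize(quantized, scale):
    """Recover approximate float weights from their integer codes."""
    return [v * scale for v in quantized]

def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    drop = set(smallest)
    return [0.0 if i in drop else w for i, w in enumerate(weights)]

# Hypothetical weights from a single layer.
weights = [0.82, -0.03, 0.41, 0.005, -1.27, 0.09]

quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
pruned = prune_by_magnitude(weights, 0.5)
```

Each restored weight differs from the original by at most half a quantization step, which is the precision traded away for a representation that needs only one byte per weight.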

#2 Multimodal models

The AI industry has mastered text processing, but handling images and video still lags behind.

However, we have reasons to believe that we’ll see more advancements in this area over the next year, greatly supported by the development of multimodal AI models. These models are capable of processing and integrating text, images, audio, video, and other types of data within a single architecture. Unlike traditional AI models that specialize in just one type of data (e.g., text-only Transformer-based language models or image classifiers like ResNet), multimodal models combine these capabilities for more advanced and efficient AI systems.

They are particularly important because one of the biggest costs in AI development is data preprocessing. Cleaning, labeling, and formatting data for machine learning models is expensive and time-consuming. Multimodal models, in turn, allow the use of raw data in any form without extensive preprocessing and without conversion. Additionally, since they can process all types of data, these models enable companies to leverage more data sources than ever before. This allows for training of larger models with broader capabilities, as diverse training data leads to improved accuracy and generalization.

Major players like OpenAI, Google, and Meta are heavily investing in multimodal AI, making it clear that these models will dominate 2025. Recent breakthroughs highlight this shift: OpenAI’s Sora generates realistic videos from text, Meta’s SeamlessM4T translates speech and text in real time, and Google DeepMind’s Gemini 1.5 processes and integrates text, images, code, and even videos.

However, for most other solutions, the journey toward generative video isn’t entirely smooth, and significant improvements in their quality and capabilities are expected in 2025.


#3 Agent-based AI systems

In the upcoming year, businesses will begin to discern their true needs when it comes to AI, shedding the unnecessary layers that came with the initial excitement. The AI and machine learning landscape will mature, and with it, a more precise and practical understanding of these technologies will emerge.

In 2025, many companies and startups will probably move away from superficial AI applications (AI-powered coffee machine advisors suggesting flavors will likely be gone with the grind) and focus instead on automation solutions that drive tangible business value.

Mature businesses will recognize the potential of automation and will leverage solutions to optimize their processes and reduce costs. Many will start utilizing their in-house technologies and data infrastructure to achieve these goals. This approach could be an attractive business model, as others might want to adopt these successful automation processes. For example, if a company automates its supply chain and achieves significant improvements, other businesses may want to replicate that success, even if they have fewer resources or less expertise.

This trend could lead to more companies sharing their innovations in automation, allowing others to integrate these advancements into their own systems.

Agent-based AI systems are set to play a central role in this trend. These intelligent agents can operate autonomously, make decisions, and optimize processes across business domains, in companies ranging from small businesses to large enterprises. AI agents can lead to more efficient problem-solving and better allocation of resources, as they can handle tasks that require advanced cognitive abilities and adaptability. Besides, they ensure AI no longer operates in isolated silos but interacts with its environment, comprehends it, and can reflect on and adjust its own reasoning.

Built on frameworks like LangChain and DSPy, as well as on cloud services, they will form the backbone of modern automation, making AI more adaptive, scalable, and cost-effective for businesses.

However, enabling such deep AI integrations will require more than just surface-level automation. It will demand strong technical foundations and system-wide AI orchestration. Such systems might take various forms, from low-code API-with-contract editors like ChatbotBuilder to open standards like MCP (Model Context Protocol) that connect models to external tools and data.
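To show what "operating autonomously" can mean in code, here is a minimal sketch of the observe, decide, act loop that agent systems are generally built around, applied to a toy supply chain. Everything in it is a hypothetical illustration: the `Agent` class, the tool names, and the hard-coded decision rules, which stand in for the language model call a real agent would typically make. It does not use LangChain, DSPy, or MCP.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

@dataclass
class Agent:
    # Tools are plain functions that take the current state and return a new one.
    tools: Dict[str, Callable[[dict], dict]]
    log: List[str] = field(default_factory=list)

    def decide(self, state: dict) -> str:
        """Placeholder policy; a real agent would usually consult a language model here."""
        if state["stock"] < state["reorder_level"]:
            return "reorder"
        if state["pending_orders"] > 0:
            return "ship"
        return "done"

    def run(self, state: dict, max_steps: int = 10) -> dict:
        """Repeat observe -> decide -> act until nothing is left to do or the step budget runs out."""
        for _ in range(max_steps):
            action = self.decide(state)
            if action == "done":
                break
            state = self.tools[action](state)
            self.log.append(action)
        return state

def reorder(state: dict) -> dict:
    return {**state, "stock": state["stock"] + 50}

def ship(state: dict) -> dict:
    return {**state, "stock": state["stock"] - 1,
            "pending_orders": state["pending_orders"] - 1}

agent = Agent(tools={"reorder": reorder, "ship": ship})
final_state = agent.run({"stock": 2, "reorder_level": 5, "pending_orders": 3})
```

The step budget (`max_steps`) is a deliberate design choice: an autonomous loop needs a hard stop so that a faulty decision rule cannot run indefinitely.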

#4 Federated learning

Federated learning is a distributed approach to training in which multiple participants, each holding their own portion of the data, contribute to building a robust shared model. Why is this particularly noteworthy?

Currently, there’s a significant challenge: we’ve almost exhausted readily available data. So where do we find new data? Traditionally, we rely on public datasets from centralized sources like Google, Facebook, and Instagram. However, a substantial amount of data is generated locally by individuals or stored on servers and databases that are not publicly accessible.

For example, some financial or healthcare institutions could share their knowledge without sharing any personal data and still reap the benefits of building a global model together.

Sharing part of this information can help others learn and provide feedback on our knowledge. It’s a win-win scenario that fosters collective growth and knowledge sharing. And while the unstructured nature of certain data like this poses challenges, the potential of federated learning is still incredibly promising.

And the best part is that with federated learning, it’s possible to move the training procedure to the client side instead of transferring the client’s data to a central server. This way, the data remains private, and only the model updates are sent to the central server. This approach ensures privacy, security, and efficient use of local data in practically any sphere.
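The mechanics can be sketched in a few lines. The example below assumes the widely used federated averaging scheme: each client trains a tiny model on its own data, and the server only ever sees the resulting weights, which it averages in proportion to each client’s sample count. The one-parameter model, the client datasets, and the function names are all illustrative assumptions.

```python
def local_update(weight, data, lr=0.05, epochs=20):
    """Train on one client's private data. Model: y = weight * x, squared-error loss."""
    for _ in range(epochs):
        grad = sum(2 * (weight * x - y) * x for x, y in data) / len(data)
        weight -= lr * grad
    return weight

def federated_round(global_weight, clients):
    """One round: clients train locally, the server averages their weights by sample count."""
    updates = [(local_update(global_weight, data), len(data)) for data in clients]
    total_samples = sum(n for _, n in updates)
    return sum(w * n for w, n in updates) / total_samples

# Three hypothetical clients; their raw (x, y) pairs never leave this list's owner.
# All of the data happens to follow y = 3x.
clients = [
    [(1.0, 3.0), (2.0, 6.0)],
    [(3.0, 9.0)],
    [(0.5, 1.5), (1.5, 4.5), (2.5, 7.5)],
]

weight = 0.0
for _ in range(5):
    weight = federated_round(weight, clients)
```

After a few rounds the shared weight settles close to 3, even though the server never accessed a single data point. Real deployments typically add safeguards such as secure aggregation, because model updates themselves can leak information.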

#5 Technology re-evaluation and Explainable AI

In 2025, most stakeholders are expected to move away from the exaggerated claims that AI will replace human roles and human decision-making entirely. Instead, AI will be acknowledged as an extremely powerful instrument: our superpower, but not our substitute.

Another prominent part of this current trend in AI is Explainable Artificial Intelligence, which aims to enable users, stakeholders and institutions to understand and trust the results and outputs created by machine learning algorithms.

Naturally, in tightly regulated sectors, errors can have serious consequences: a misdiagnosis in healthcare, a wrongly rejected insurance claim, or a faulty quality check in manufacturing can all lead to financial, legal, or even life-threatening issues. That is why there’s a growing demand for understandable explanations of AI decisions for all parties involved. But explanations aren’t one-size-fits-all: for example, explanations for patients will differ from those for doctors, and both will differ from those consumed by other systems.

And this is also where regulatory affairs come in: AI developers must clearly demonstrate that their models work correctly and don’t discriminate or cause harm.

And even after an AI system is approved and launched, it must go through post-market surveillance, meaning it’s continuously monitored for errors, biases, or unexpected behaviors. If problems arise, companies must fix them and update their systems to comply with evolving regulations.

However, there is currently no unified framework for providing these explanations, despite the existence of various approaches to explaining models and data in general. The more complex and extensive a model’s internals are, the harder they become to explain. Additionally, these explanations often involve complex mathematics, making them challenging to understand for those without a strong mathematical background.
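For the simplest class of models, an explanation can be computed exactly. The sketch below assumes a linear scoring model for the insurance claim scenario mentioned earlier and splits its score into per-feature contributions relative to a baseline customer. The feature names, weights, and baseline are invented for illustration; deep models need approximation methods, which is where the difficulty described above comes from.

```python
def explain_linear(weights, bias, features, baseline):
    """Split a linear model's score into per-feature contributions relative to a baseline input."""
    contributions = {
        name: weights[name] * (features[name] - baseline[name])
        for name in weights
    }
    baseline_score = bias + sum(weights[name] * baseline[name] for name in weights)
    score = baseline_score + sum(contributions.values())
    return score, baseline_score, contributions

# Hypothetical claim-approval model: a higher score means approval is more likely.
weights = {"claim_amount": -0.5, "years_as_customer": 0.25, "prior_claims": -1.5}
baseline = {"claim_amount": 2.0, "years_as_customer": 4.0, "prior_claims": 0.0}
applicant = {"claim_amount": 6.0, "years_as_customer": 8.0, "prior_claims": 2.0}

score, baseline_score, contributions = explain_linear(weights, 1.0, applicant, baseline)

# Rank features by how strongly they moved the score away from the baseline.
ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
```

The ranked list yields a statement a non-specialist can follow, such as "two prior claims lowered the score the most", which can then be phrased differently for each audience.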

But as AI adoption grows, the demand for transparent and accountable systems will only increase. Explainable AI, supported by strong regulatory oversight and ongoing post-market surveillance, will be essential to ensuring that AI remains trustworthy, fair, and safe for everyone.

However, these elements are only one part of the equation. We will likely progress to a higher level, where we determine which decisions the model should handle, which decisions should remain unmade, and which should be made exclusively by humans. Most importantly, we will focus on how all these elements interact with each other.

More AI tech trends to watch in 2025

Deepfakes

Deepfakes, a combination of “deep learning” and “fake”, refer to images, videos, or audio created or altered using AI tools. These can depict either real or entirely fictional people.

Over the years, deepfakes have contributed to a surge of misinformation online, and some incidents have caused serious real-world damage. For instance, in early 2024, an employee of a major international company was tricked into transferring $25 million to fraudsters after a convincing video call with someone posing as the company’s chief financial officer.

So in 2025, there will be a concerted effort to develop methods for accurately determining whether content is AI-generated or not. This will involve sophisticated techniques and tools to analyze the authenticity of content, ensuring that both the message and the medium are verified with precision. As these methods advance, we can expect a significant emphasis on fact-checking to identify and manage AI-generated content, making it a crucial aspect of Generative AI trends.

Real-time interaction and speech integration

Real-time interaction and speech integration involve systems that can understand and respond to human speech and actions instantaneously. This technology is used in virtual assistants, customer service bots, and interactive applications. These systems leverage natural language processing (NLP) and speech recognition to provide seamless and intuitive user experiences.

Soon, we can expect systems to integrate multimodal interfaces, combining speech, visuals, and gestures to create richer, more natural interactions.

As deepfake technology continues to advance, conversational AI will play a crucial role in detecting and preventing fraud by analyzing speech patterns and visual cues to flag potential inconsistencies.

AI and marketing

In 2025, AI is poised to significantly transform marketing strategies. We’ll see major AI marketing trends focused on analyzing and predicting customer behavior, streamlining the evaluation of campaign efficiency, and managing brand reputation to ensure a positive brand image. The key trends include:

- Hyper-personalization

In 2025, AI personalization will create highly individualized experiences at scale. Physical ad campaigns will adjust based on recent purchases, and data from online purchases will power real-life ads. Hyper-personalization will also extend to data-driven marketing, with mobile notifications offering timely deals based on daily routines.

- Predictive analytics for targeted advertising

AI-driven predictive analytics made waves in 2024 and aren’t showing any signs of slowing down in 2025. These powerful AI systems process vast amounts of data from multiple sources, forecasting customer behavior, identifying new market opportunities, and predicting changes in consumer sentiment. They uncover patterns that are difficult to detect manually, taking into account factors like weather, local events, and historical purchasing data. This enables real-time, dynamic strategies that adapt to changing conditions.

- Data-driven AI readiness

In 2025, marketing teams will focus on breaking down data silos and creating data-driven cultures to prepare their data for AI tools. This will enable marketers to holistically analyze performance and trends, making data integral to their decisions.

- Ad placement control and DAI

Brands are increasingly seeking more control over the placement of their ads to ensure both brand safety and relevance. AI-powered contextual targeting is advancing beyond traditional keyword-based methods, employing natural language processing (NLP) and sentiment analysis to place ads in highly relevant, brand-safe environments.

In 2025, we can expect AI-powered, hyper-personalized Dynamic Ad Insertion (DAI) to expand into connected TV (CTV), live sports streaming, and gaming. This technology will dynamically place the most relevant ads for each user at the moment of playback, creating adaptive ad experiences tailored to real-time viewer sentiment.

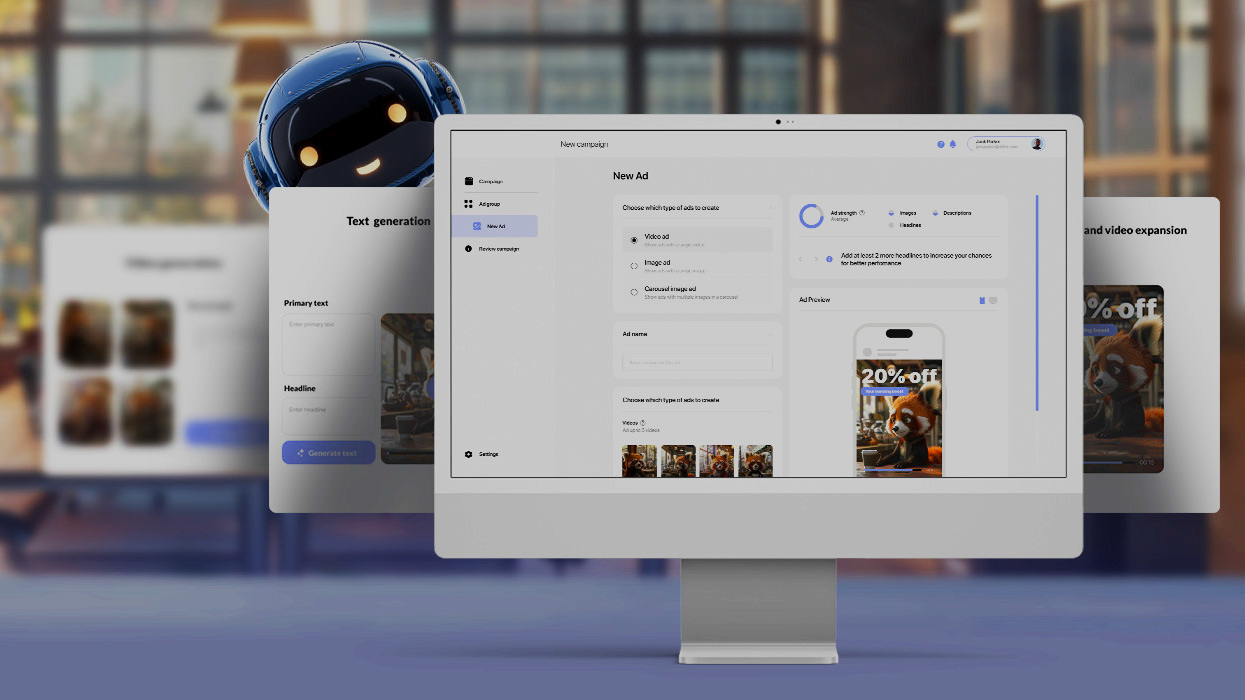

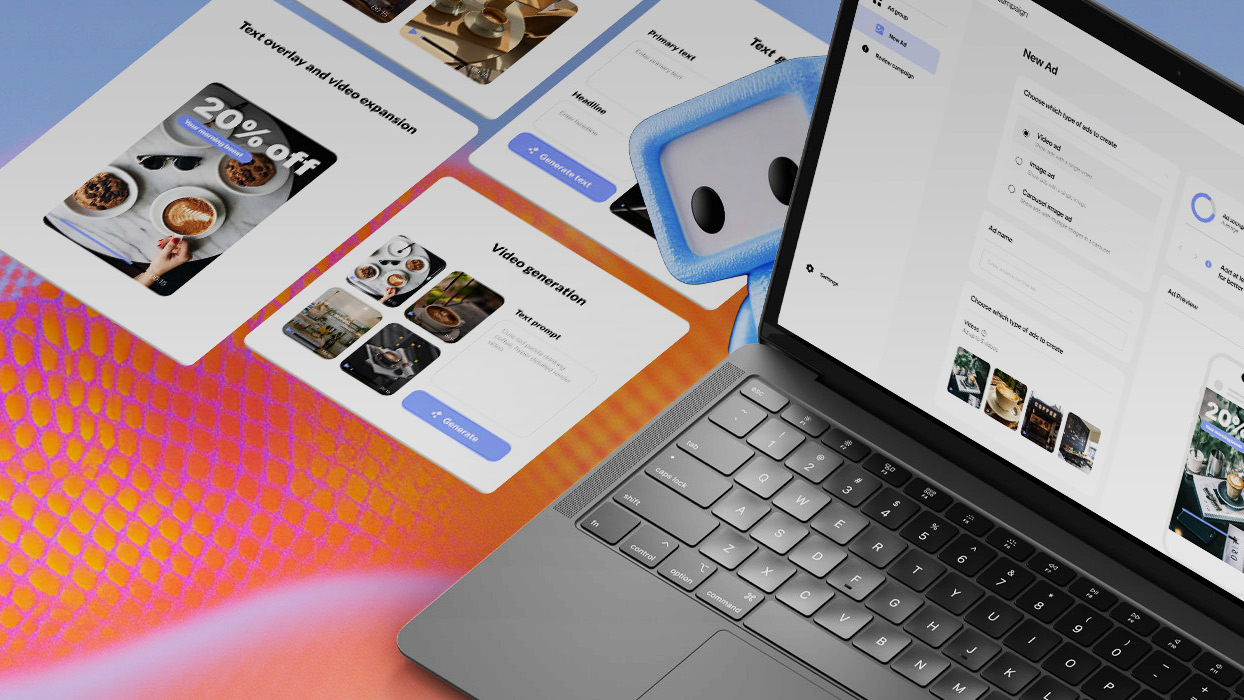

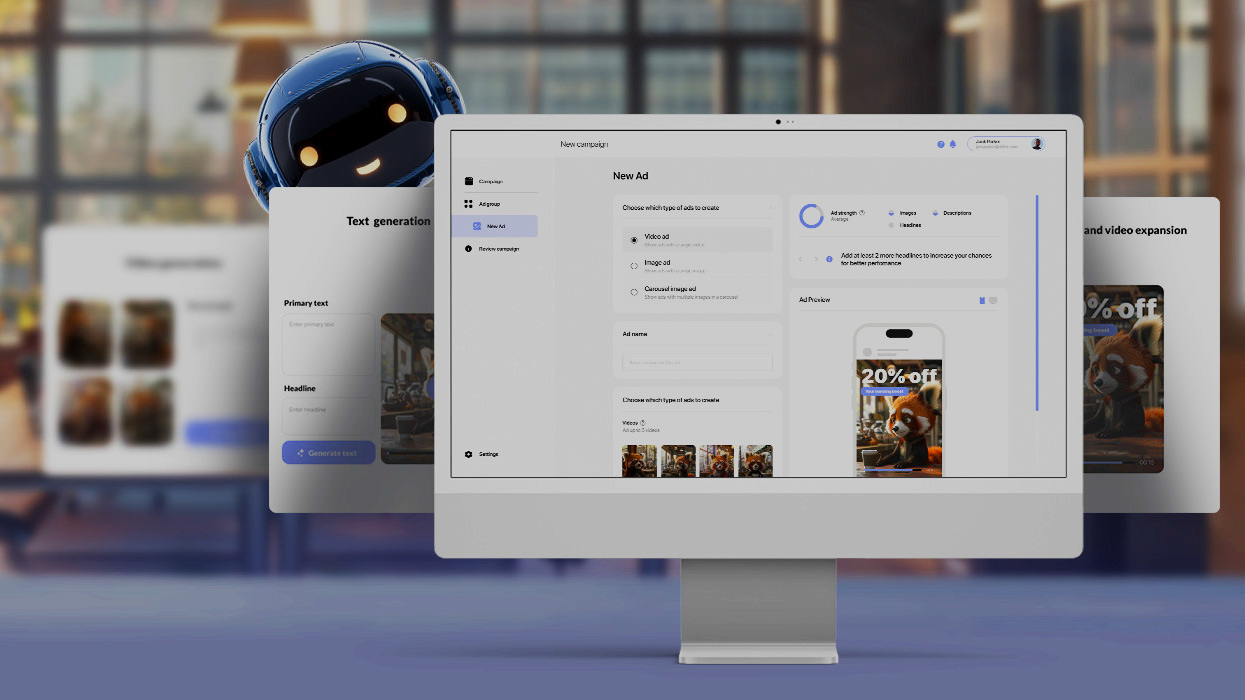

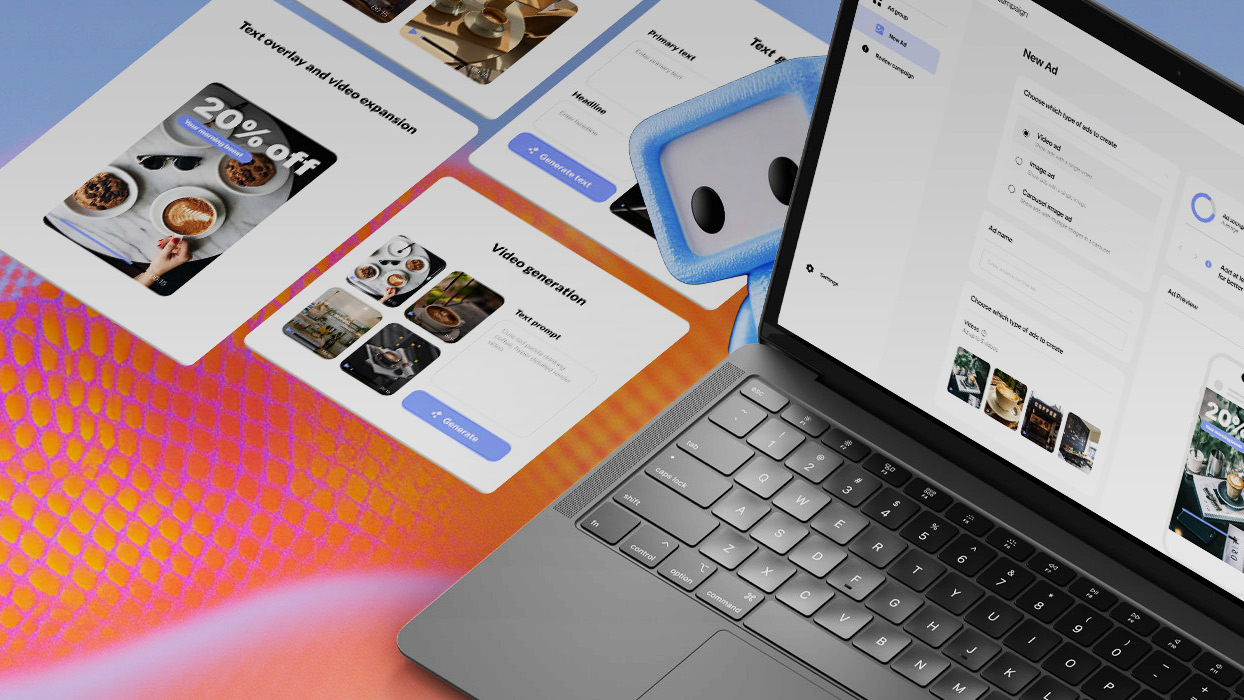

Case in point: Simplifying digital advertising with AI-powered ad generation

Upgrading ad campaigns for marketing agencies tired of outdated methods and uncertain audience responses.

- Website crawling and campaign launch: AI extracts key topics and keywords, setting up campaign parameters.

- Analyzing data: Evaluates historical data and past campaigns.

- Continual adjustments: Analyzes audience responses and refines target group parameters.

- Bid optimization: Uses real-time data for accurate budget allocation and meaningful reports.

Feeling the urge to tap into the magic of AI?

Which tool is the best one? How do you integrate it seamlessly into your existing processes? And what comes next after implementation?

These are the questions we love answering. Our goal is to provide clear, actionable insights on AI tools, implementation, and integration to guide you in making the best choice.